From U of T's The Varsity news page

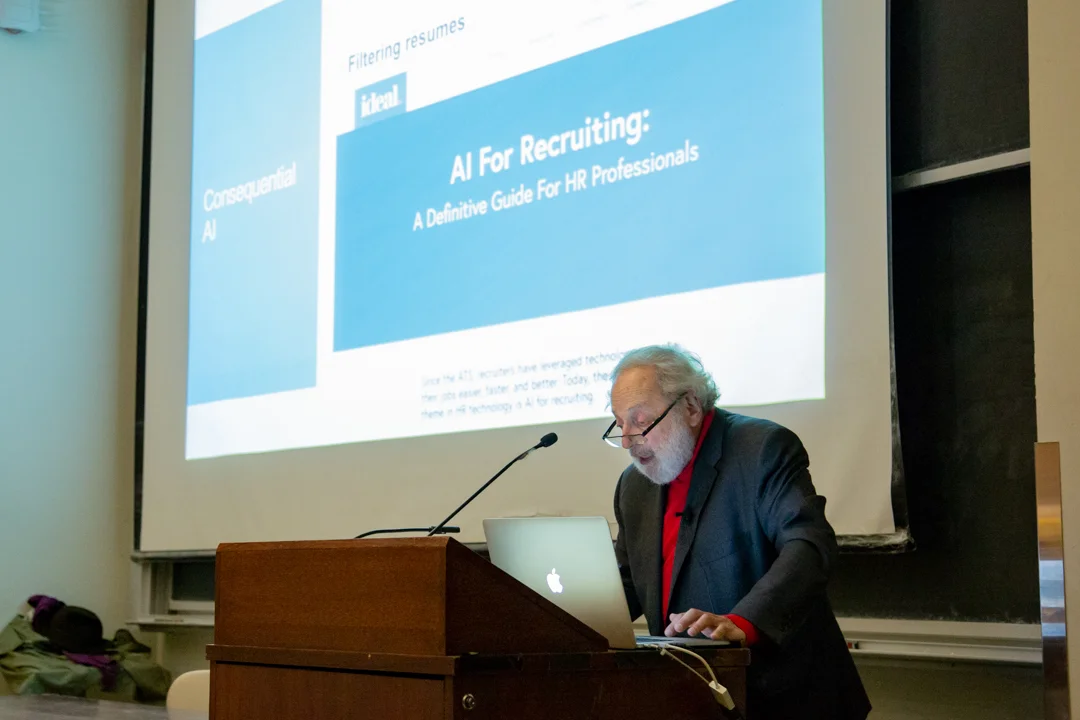

As advances in artificial intelligence (AI) reach new heights, there are certain traits that AI systems must have if humans are to trust them to make consequential decisions, according to U of T Computer Science Professor Emeritus Dr. Ronald Baecker at his lecture, “What Society Must Require of AI.” In an effort to learn more about how society will change as AI advances, I attended the talk on June 5 and left better informed about the important role that people have to play in shaping this fast-changing technology.

How is today’s AI different from past technologies?

AI has been part of computer science for decades, yet its progress has recently accelerated at a striking pace, with machine learning catalyzing advances in the field.

Machine learning has improved pattern recognition to such an extent that it can now make consequential decisions normally made by humans. However, AI systems that apply machine learning can sometimes make mistakes.

For many AI systems, such mistakes are acceptable. These nonconsequential systems rarely make life-or-death decisions, explained Baecker, and their mistakes are “usually benign and can be corrected by trying again.”

But for consequential systems, which are AI-based software that addresses more complex problems, such mistakes are unacceptable.

Problems could arise when using AI to drive autonomous vehicles, diagnose medical conditions, inform decisions made in the justice system, and guide military drones. Mistakes in these areas could result in the loss of human life.

Baecker said that the research community must work to improve consequential AI, which he explained through his proposed “societal demands on AI.” He noted that these demands must give AI human-like attributes in order to improve the decisions that it makes.

Would you trust consequential AI?

When we agree to implement solutions for complex problems, said Baecker, we normally need to understand the “purpose and context” behind the solution suggested by a person or organization.

“If doctors, police officers, or governments make [critical] decisions or perform actions, they will be held responsible,” explained Baecker. “[They] may have to account or explain the logic behind these actions or decisions.”

However, the decision-making processes behind today’s AI systems are often difficult for people to understand. If we cannot understand the reasoning behind an AI’s decisions, it may be difficult for us to detect mistakes by the system and to justify its correct decisions.

Two questions we must answer to trust consequential AI

If a system makes a mistake, how can we easily detect it? The procedures that certain machine learning systems use cannot be easily explained, as their complexity — based on “hundreds of thousands of processing elements and associated numerical weights” — cannot be communicated to or understood by users.

Even if the system works fine, how can we trust the results? For example, physicians reassure patients by explaining the reasoning for their treatment recommendations, so that patients understand what their decisions entail and why they are valid. It’s difficult to reassure users skeptical of an AI system’s decision when the decision-making process may be impossible to adequately explain.

Another real-life problem arises when courts use AI-embedded software to predict a defendant’s recidivism in order to aid in the setting of bonds. If that software system were inscrutable, then how could a defendant challenge the system’s reasoning on a decision that affects their freedom?

I found Baecker’s point fascinating: for society to be able to trust consequential AI systems, which may become integrated with everyday technologies, we must be able to trust them as we trust human decision-makers, and to do so, we must answer these questions.

Baecker’s point deserves more attention from us students, who, beyond using consumer technology every day, will likely experience the societal consequences of these AI systems once they are widely adopted.

Society must hold AI systems to stringent standards to trust them with life-or-death decisions

Baecker suggested that AI-embedded systems and algorithms must exhibit key characteristics of human decision-makers, offering a list that, he noted, “seems overwhelming.”

A trustworthy complex AI system, said Baecker, must display competence, dependability and reliability, openness, transparency and explainability, trustworthiness, responsibility and accountability, sensitivity, empathy, compassion, fairness, justice, and ethical behaviour.

Baecker noted that the list is not exhaustive — it omits other attributes of true intelligence, such as common sense, intuition, context use, and discretion.

At the same time, he recognized that his list of requirements is an “extreme position,” one that sets very high standards for a complex AI system to be considered trustworthy.

However, Baecker reinforced his belief that complex AI systems must be held to these stringent standards for society to be able to trust them to make life-or-death decisions.

“We as a research community must work towards endowing algorithmic agents with these attributes,” said Baecker. “And we must speak up to inform society that such conditions are now not satisfied, and to insist that people not be intimidated by hype and by high-tech mumbo-jumbo.”

“Society must insist on something like what I have proposed, or refinements of it, if we are to trust AI agents with matters of human welfare, health, life, and death.”