This event is organized by the Fields Institute

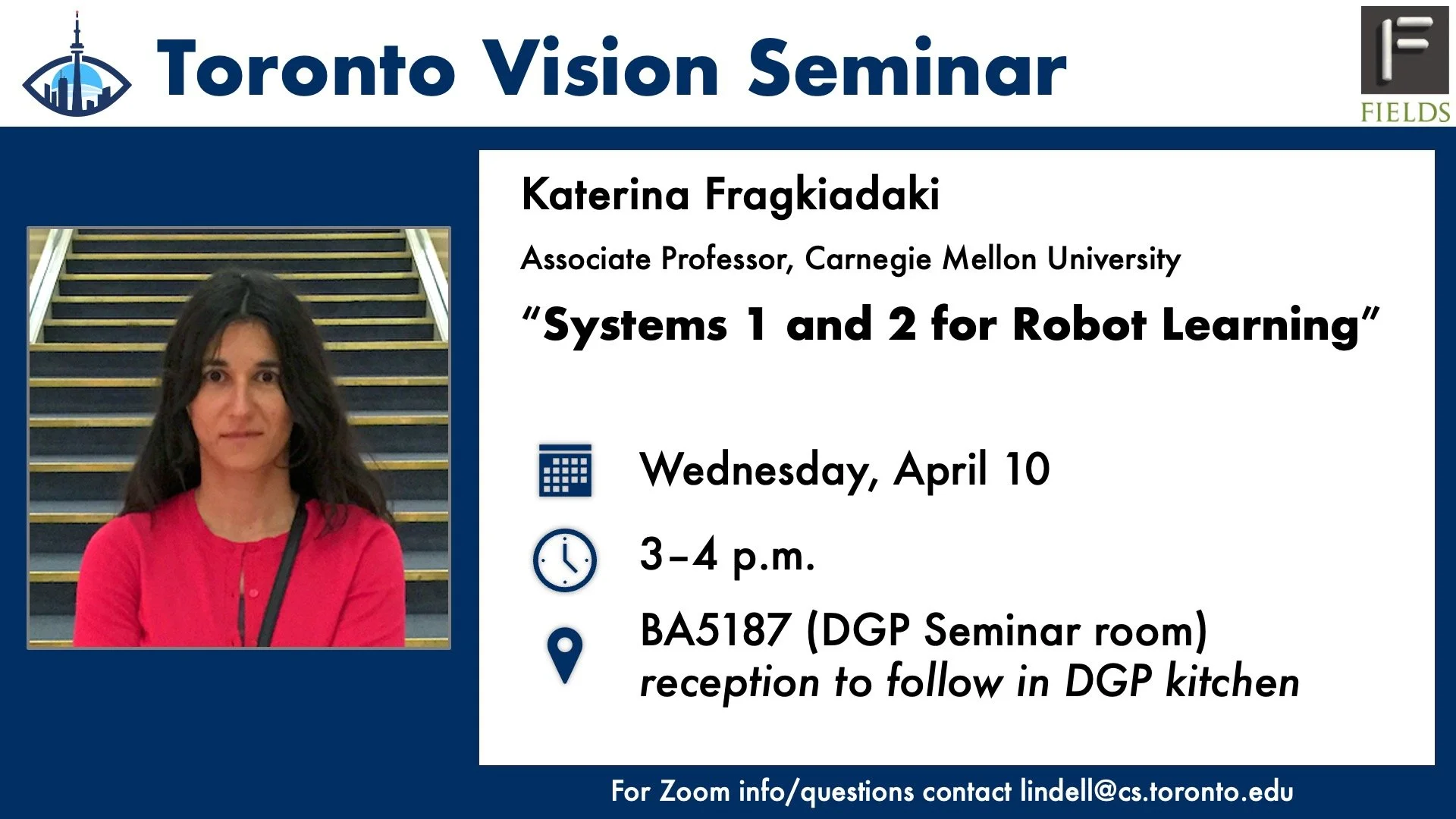

Toronto Vision Seminar with Katerina Fragkiadaki

Date: Wednesday, April 10

Time: 3 - 4 p.m.

Location: BA 5187 (DGP Seminar Room)

Email lindell@cs.toronto.edu for the online link.

Talk Title: "Systems 1 and 2 for Robot Learning"

Abstract: Humans can successfully handle both easy (mundane) and hard (new and rare) tasks simply by thinking harder and being more focused. In contrast, today's robots spend a fixed amount of compute on both familiar and rare tasks, which lie inside and far from the training distribution, respectively, and have no way to recover once their fixed-compute inferences fail. How can we develop robots that think harder and do better on demand?

In this talk, we will marry today's generative models with traditional evolutionary search and 3D scene representations to enable better generalization of robot policies and the ability to think through difficult scenarios at test time, akin to System 2 reasoning for robots. We will discuss learning behaviours through language instructions and corrections from both humans and vision-language foundation models, which shape the robots' reward functions on the fly and help us automate robot training data collection in the simulator and in the real world. The models we will present achieve state-of-the-art performance on RLBench, CALVIN, nuPlan, TEACh, and ScanNet++, which are established benchmarks for manipulation, driving, embodied dialogue understanding, and 3D scene understanding.

Bio: Katerina Fragkiadaki is an Assistant Professor in the Machine Learning Department at Carnegie Mellon University. She received her undergraduate diploma in Electrical and Computer Engineering from the National Technical University of Athens. She received her Ph.D. from the University of Pennsylvania and was then a postdoctoral fellow at UC Berkeley and Google Research. Her work focuses on combining forms of common-sense reasoning, such as spatial understanding and 3D scene understanding, with deep visuomotor learning. The goal of her work is to enable few-shot learning and continual learning for perception, action, and language.