The University of Toronto Department of Computer Science has an impressive presence at the 16th ACM SIGGRAPH Conference and Exhibition on Computer Graphics and Interactive Techniques in Asia, with 14 technical papers accepted, including one that received a best paper award.

SIGGRAPH Asia, taking place from December 12 to 15 in Sydney, Australia, is bringing together technical and creative people from around the world, showcasing the latest research and innovation in computer graphics and interactive techniques.

The research being presented by our faculty and students covers a range of topics, including using neural networks to efficiently render highly detailed 3D scenes; numerical techniques for more accurate vector graphics; neural networks for collision detection; conversion between implicit and explicit representations for surface reconstruction of 3D objects; a simulation method to capture the dynamic nature of mixed materials; and more.

Here is a closer look at the 14 U of T technical papers accepted to the conference.

Best Paper Award: Adaptive Shells for Efficient Neural Radiance Field Rendering

Zian Wang, Tianchang Shen, Merlin Nimier-David, Nicholas Sharp, Jun Gao, Alexander Keller, Sanja Fidler, Thomas Müller and Zan Gojcic

The paper presents a novel neural radiance formulation that generates images with high visual fidelity at a greatly reduced computational cost. The proposed method models the scene complexity and derives an acceleration structure on top of it. It renders up to 10 times faster than older methods, enabling new opportunities for 3D content creation.

“Winning the Best Paper Award is an incredible honour for our team! The recognition inspires us to continue our dedication to advancing the field, and pushing the frontiers of research,” said Zian Wang, a fifth-year PhD student and joint first author.

“This milestone in our research journey is the result of our team's awesome collaborative effort, and it deepens my enthusiasm for breaking new ground in the vibrant field of computer graphics!” said Tianchang Shen, a third-year PhD student and joint first author.

An Adaptive Fast-Multipole-Accelerated Hybrid Boundary Integral Equation Method for Accurate Diffusion Curves

Seungbae Bang, Kirill Serkh, Oded Stein, Alec Jacobson

Zooming in on regular images eventually reveals individual pixels. Vector graphics are a type of digital image that stays crisp at any resolution, but until now they were limited to simple designs without much colour variation. Led by former postdoctoral researcher Seungbae Bang, this new paper demonstrates how to add rich colour changes to vector graphics with high accuracy and rendering speed.

A diffusion curve image drawn with the researchers’ method demonstrates that extremely zoomed-in views maintain high accuracy. (Courtesy of the authors)

Progressive Shell Quasistatics for Unstructured Meshes

Jiayi Eris Zhang, Jérémie Dumas, Yun (Raymond) Fei, Alec Jacobson, Doug L. James, Danny M. Kaufman

Simulating the behaviour of thin shells such as metal, cloth or rubber on a computer helps people create interesting digital effects and design objects they may later manufacture. Unfortunately, previous methods are either inaccurate or very slow. Led by former undergrad Jiayi Eris Zhang, this new paper simulates thin shells progressively, so designers see accurate previews at interactive rates.

Progressive simulation of sleepy shell characters resting inside a rigid fullerene shape. Both the rigid colliders (bunny and cage) and the balloon-like characters are modelled using unstructured meshes, which are coarsened, posed by an artist, and then progressively and safely refined during Progressive Shell Quasistatics simulation. (Courtesy of the authors)

Bézier Spline Simplification Using Locally Integrated Error Metrics

Siqi Wang, Chenxi Liu, Daniele Panozzo, Denis Zorin, Alec Jacobson

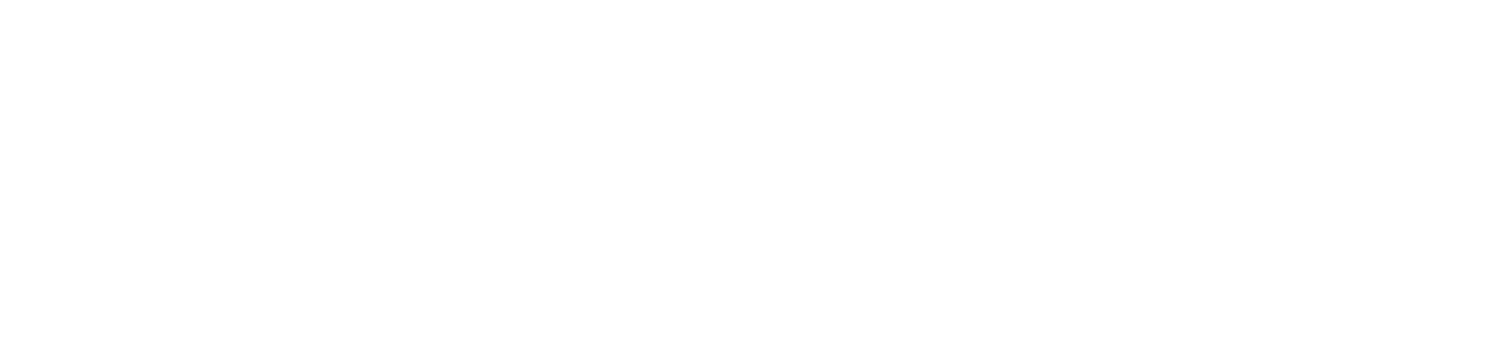

Vector graphics images are widely used in print and digital media due to their sharpness at any resolution. Simplifying these vector images is common for efficient display and storage, but existing simplification methods often introduce noticeable modifications to the original designs. Our new method produces significantly compressed images that are either identical to or visually indistinguishable from the originals.

Researchers demonstrate a method that allows a compressed vector graphics image to look either identical to or visually indistinguishable from the original. (Courtesy of the authors)

Reach for the Spheres: Tangency-Aware Surface Reconstruction of SDFs

Silvia Sellán, Christopher Batty, Oded Stein

In a computer, a 3D object is stored either explicitly (e.g., as a set of triangles, or mesh) or implicitly (e.g., as a function like a signed distance function). Each representation has its benefits and drawbacks, making conversion between them a critical step of the computer graphics pipeline. We propose a new method for converting from implicit to explicit representations that, despite being based on very simple geometric principles, produces higher-quality reconstructions than recent state-of-the-art data-driven approaches.

Reconstructing a mesh from the discrete signed distance field (SDF) of a koala (source, rightmost). By using global information from all sample points at once, the researchers’ method recovers the shape even in low resolutions where methods like Marching Cubes and Neural Dual Contouring (NDCx) produce very coarse shapes (left trio), and it recovers surface detail at higher resolutions that Marching Cubes and NDCx miss (middle trio). The researchers’ method is purely geometric, and does not require any training or storing of weights (unlike NDCx). (Courtesy of the authors)
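To make the implicit representation concrete, here is a minimal Python sketch of a signed distance function for a sphere. It illustrates one simple geometric fact that an SDF sample carries: the ball centred at the sample point with radius equal to the absolute SDF value touches the surface but never crosses it. This is only an illustration under stated assumptions, not the researchers' reconstruction algorithm; the names `sphere_sdf`, `tangent_ball` and `surface_points` are hypothetical.

```python
import math

def sphere_sdf(p, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance from p to a sphere: negative inside, positive outside."""
    return math.dist(p, center) - radius

def tangent_ball(p, sdf):
    """Ball around sample point p that the surface touches but never enters.

    Its radius is |sdf(p)|, the distance from p to the closest surface point.
    """
    return p, abs(sdf(p))

def surface_points(n=200):
    """Explicit samples of the unit sphere, spread along a spiral."""
    pts = []
    for i in range(n):
        z = 1.0 - 2.0 * (i + 0.5) / n
        r = math.sqrt(1.0 - z * z)
        phi = i * math.pi * (3.0 - math.sqrt(5.0))
        pts.append((r * math.cos(phi), r * math.sin(phi), z))
    return pts

# One SDF sample taken outside the unit sphere.
sample = (2.0, 0.0, 0.0)
center, radius = tangent_ball(sample, sphere_sdf)

# No explicit surface point lies strictly inside the tangent ball.
closest = min(math.dist(q, center) for q in surface_points())
print("tangent ball radius:", radius)
print("closest surface sample:", closest)
```

Every sample on an SDF grid yields one such ball, so a grid of values constrains the surface from many points at once.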

Neural Stochastic Screened Poisson Surface Reconstruction

We use machine learning to study the uncertainty in how 3D scanners (for example, the ones on an autonomous car) perceive the world around them. Our method lets us compute critical quantities like the probability that an object will collide with a scene, or the position to which a scanner should be moved next to obtain the maximum possible information about the scene.

Researchers use a neural network to quantify the reconstruction uncertainty in Poisson Surface Reconstruction (centre left), allowing them to efficiently select next sensor positions (centre right) and update the reconstruction upon capturing data (right). (Courtesy of the authors)

Constructive Solid Geometry on Neural Signed Distance Fields

Zoë Marschner, Silvia Sellán, Hsueh-Ti Derek Liu, Alec Jacobson

We introduce a method for performing geometric edits on shapes represented with neural signed distance functions (SDFs), which are functions encoded as neural networks that store geometric data implicitly. With our editing operations, we can compute neural SDFs of complex parametric shapes, similar to parametric designs constructed with computer-aided design (CAD) tools. We also show how to compute neural SDFs for the region of space covered by a shape swept along a path, which can be used to create strokes from a brush in digital drawing tools.
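For readers unfamiliar with constructive solid geometry on implicit shapes, the following Python sketch shows the classic way to combine analytic (not neural) SDFs with `min` and `max`. It is a textbook illustration, not the method from the paper, and the helper names `sphere`, `union`, `intersection` and `difference` are assumptions made for this example. These combinations give the correct inside/outside sign, but the resulting values are in general only bounds on the true distance rather than exact distances.

```python
import math

def sphere(center, radius):
    """Exact SDF of a sphere: negative inside, positive outside."""
    return lambda p: math.dist(p, center) - radius

def union(a, b):
    """Inside if the point is inside either shape."""
    return lambda p: min(a(p), b(p))

def intersection(a, b):
    """Inside only if the point is inside both shapes."""
    return lambda p: max(a(p), b(p))

def difference(a, b):
    """Inside a but outside b (b is carved out of a)."""
    return lambda p: max(a(p), -b(p))

# Two overlapping unit spheres, one shifted along the x-axis.
left = sphere((0.0, 0.0, 0.0), 1.0)
right = sphere((1.0, 0.0, 0.0), 1.0)

either = union(left, right)
lens = intersection(left, right)
bite = difference(left, right)

for name, shape in [("union", either), ("intersection", lens), ("difference", bite)]:
    value = shape((0.5, 0.0, 0.0))
    print(name, "inside" if value < 0 else "outside")
```

The sign test `value < 0` is all that is needed to decide membership, which is why these operators are popular for modelling even though the combined function may no longer be a true distance.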

Compact Neural Graphics Primitives with Learned Hash Probing

Towaki Takikawa, Thomas Müller, Merlin Nimier-David, Alex Evans, Sanja Fidler, Alec Jacobson, Alexander Keller

Neural fields have emerged as a general paradigm for representing scalar and vector fields compactly (in memory and storage space) by learning a neural network. In practice, neural fields are either compact but slow, or fast but bulky. We propose a neural field architecture that is both compact and fast, by leveraging a novel differentiable data structure that takes inspiration from probing algorithms for hash tables. Our method has applications in any system where compression algorithms are not readily available for the data at hand and data bandwidth is a usability concern.

Neural Collision Fields for Triangle Primitives

Ryan Zesch, Vismay Modi, Shinjiro Sueda, David I.W. Levin

Explore exciting advancements in physics simulation with ‘Neural Collision Fields for Triangle Primitives’ by U of T’s David I.W. Levin and Vismay Modi, alongside Texas A&M University collaborators Ryan Zesch and Shinjiro Sueda. This paper uses artificial intelligence to improve how simulations handle object collisions, circumventing challenges posed by traditional collision-resolution methods. By training a neural network to repel triangles that are in contact, their method is able to handle complex simulations with ease.

Researchers propose a new smoothed surface integral formulation for collision detection and resolution between triangle meshes, along with a neural integrated triangle-triangle collision primitive for use during dynamic simulations of elastica. (Courtesy of the authors)

ViCMA: Visual Control of Multibody Animations

Doug L. James, David I.W. Levin

Control of large-scale, multibody physics-based animations is difficult. Optimization-based approaches are computationally daunting, and as the number of interacting objects increases, they can even fail to find satisfactory solutions. Browsing techniques avoid some of these difficulties but are still time- and computation-intensive. We present a new, complementary method for controlling such animations. We demonstrate our method on a number of large-scale, contact-rich examples involving both rigid and deformable bodies.

Researchers present a new method to visually control multibody animations. This method uses object motion and visibility, and its overall cost is comparable to a single simulation. (Courtesy of the authors)

Subspace Mixed Finite Elements for Real-Time Heterogeneous Elastodynamics

Ty Trusty, Otman Benchekroun, Eitan Grinspun, Danny Kaufman, David I.W. Levin

Our world is composed of both soft elements, such as muscles, and rigid components like bones. Traditional techniques in physics simulation, especially those optimized for speedy “real-time” experiences, typically focus on one type of material at a time, either soft or hard, leading to a compromise in visual quality or performance. Attempting to apply these methods to mixed materials often results in sluggish gameplay or a significant drop in animation quality. We propose a simulation technique that manages to maintain real-time responsiveness while capturing the dynamic nature of mixed materials. This breakthrough allows for the seamless integration of detailed and realistic movements in virtual environments. We can now successfully simulate a highly intricate mammoth model, composed of both bone and muscle, from the Smithsonian at real-time frame rates.

Researchers demonstrate a modelling method that can create real-time simulations of objects made of mixed materials, such as a mammoth model composed of both bone and muscle. (Courtesy of the authors)

iNVS: Repurposing Diffusion Inpainters for Novel View Synthesis

Yash Kant, Aliaksandr Siarohin, Michael Vasilkovsky, Riza Alp Guler, Jian Ren, Sergey Tulyakov, Igor Gilitschenski

Our work, iNVS, showcases an innovative approach for novel view synthesis from a single image. It employs 3D-splatting guided by monocular depth to transfer source pixels to a novel viewpoint, and uses a 3D-aware Stable Diffusion Inpainter to create novel views. iNVS can improve the creation of 3D assets while maintaining the integrity and detail of the original image, and is particularly beneficial in applications like virtual reality and digital content creation.

iNVS can synthesize novel views from a single image while preserving sharp details such as text and texture well. (Courtesy of the authors)

Neural Stress Fields for Reduced-order Elastoplasticity and Fracture

Zeshun Zong, Xuan Li, Minchen Li, Maurizio M. Chiaramonte, Wojciech Matusik, Eitan Grinspun, Kevin Carlberg, Chenfanfu Jiang, Peter Yichen Chen

The paper presents a new method that combines neural networks with physics to simulate materials like metals and fluids more quickly and with less computer memory. This approach is particularly useful for virtual reality and other applications where speed and efficiency are crucial. It achieves this by simplifying the complex calculations needed for these simulations, making them up to 100,000 times smaller in dimension and 10 times faster.

The researchers’ method accurately models tearing and other damage to everyday materials such as bread. (Courtesy of the authors)

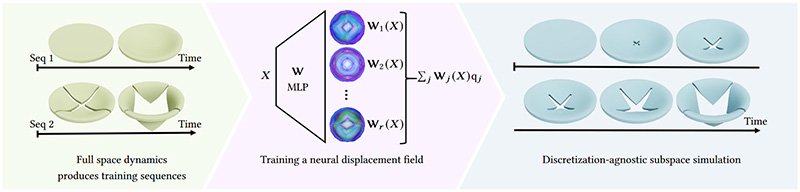

LiCROM: Linear-Subspace Continuous Reduced Order Modeling with Neural Fields

Yue Chang, Peter Yichen Chen, Zhecheng Wang, Maurizio M. Chiaramonte, Kevin Carlberg, Eitan Grinspun

Some existing methods can speed up simulations significantly by simplifying them, but these methods face limitations: they only work with specific shapes and can't handle changes like cutting, changing geometry, or swapping characters. We've introduced a new method that handles these changes, making simulations more flexible and up to 56 times faster.

Through a new workflow, the researchers are able to achieve a 100-times speedup on simulations that could not be sped up before, particularly simulations that involve cutting, punching out holes, or other changes to the geometry. (Courtesy of the authors)

To read more, see the list of accepted technical papers by DCS-affiliated authors on the University of Toronto’s webpage on SIGGRAPH Asia 2023’s website.